With Tesla’s FSD still not officially launched in China, Huawei, a company that does not build cars itself, seems to have taken over the relay baton of intelligent driving in China. It has injected some high-tech “poetry and distance” (a sense of aspiration) into the increasingly fierce price war in the Chinese auto industry. In early November, a public back-and-forth on social media between Huawei Executive Director Yu Chengdong and XPeng Motors CEO He Xiaopeng once again drew wide attention to AEB (automatic emergency braking), and the topic went viral.

On November 26, Huawei’s car BU officially announced the establishment of a new company, which will bring in investment from Changan Automobile and its affiliates, with their stake not exceeding 40%. Reports also indicate that Huawei is in talks with other potential investors such as FAW and Dongfeng, and that the new company could be valued at as much as 250 billion yuan.

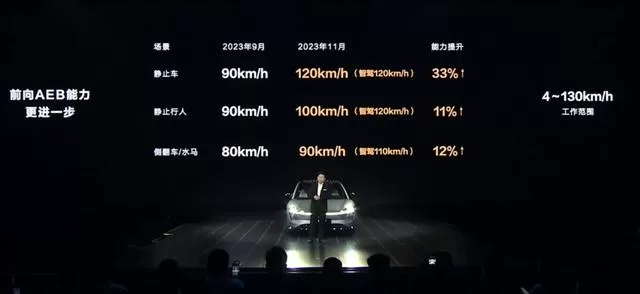

On November 28, the Luxeed (Zhijie) S7, the first model of Huawei’s all-electric Luxeed brand, was officially launched. Developed in collaboration with Chery, it carries LiDAR and Huawei’s ADS 2.0 advanced intelligent driving system, like the refreshed AITO (Wenjie) models. At the launch event, an S7 drove onto the stage by itself via remote summoning. Huawei also showed videos of the S7 parking itself and performing valet parking, covering more than 160 parking scenarios, including mechanical (stacked) parking spaces. Huawei announced that its NCA intelligent driving assistance will be open to the public nationwide by the end of 2023, and that the S7’s forward AEB can now brake actively for a stationary vehicle at speeds of up to 120 km/h.

With the rise of Tesla and the new EV makers, consumer electronics companies such as Huawei and Xiaomi have joined the automotive race. Intelligence has become a key battleground for electric vehicles, and autonomous driving technology is clearly every manufacturer’s focus.

Controversy behind AEB: the ambiguity between L2.9 and L3

In recent years, as Musk kept building hype for Tesla FSD on overseas social media, China’s new EV makers have promoted LiDAR, navigation assistance, and other autonomous driving hardware and software as brand labels. “Autonomous driving” in passenger cars seems to be in a state of “Schrödinger’s cat”. On the one hand, new functions and concepts keep emerging, and manufacturers’ marketing suggests that before long human drivers will be able to hand the steering wheel over to the system and achieve complete “autonomous driving”. On the other hand, the market penetration of intelligent driving systems such as highway NOA (navigate on autopilot) has not risen significantly, and these systems have not won broad consumer acceptance. After several related traffic accidents in China, major car companies, to avoid misleading users, replaced “autonomous driving” in their marketing with “advanced intelligent driving” and “advanced assisted driving”, stressing “assistance” rather than “automation”.

To clarify these concepts, it helps to revisit the driving automation levels defined by SAE International (the Society of Automotive Engineers) in its J3016 standard. The diagram below gives a clear and concise overview.

A simple way to tell the levels apart is to look at the hands on the steering wheel in the picture. Up to Level 2, the driver must keep their hands on the wheel at all times, and responsibility for driving rests with the human. From Level 3, the driver may take their hands off the wheel under certain conditions, and responsibility gradually shifts to the vehicle and its manufacturer. In other words, under this standard there is no quantifiable performance threshold separating Level 2 “assisted driving” from Level 3 “autonomous driving”; the difference lies mainly in whether the driver or the manufacturer is responsible for an accident.

In practice, system capability and level do not always match. In May 2022, Mercedes-Benz launched Drive Pilot in Germany, billed as the world’s first Level 3 system. It can be activated on German highways under certain conditions, at speeds of up to 60 km/h, and cannot change lanes on its own. Even so, the driver may take their hands off the wheel and engage in permitted secondary activities, and Mercedes-Benz assumes responsibility in the event of an accident.

In China, Huawei and the new EV makers NIO, XPeng, and Li Auto have already rolled out highway NOA, which works at speeds of up to 120 km/h and lets the vehicle change lanes, overtake, and take on- and off-ramps automatically along the navigation route. Drivers, however, must still keep their hands on the wheel at all times, so these systems are classified as L2 or L2+, and are sometimes hyped as “L2.99999…”.
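Because J3016 levels describe discrete roles rather than a performance score, a label such as “L2.99999…” still collapses to Level 2. The Python sketch below encodes a simplified paraphrase of those roles; the class, its field names, and the label-to-level mapping are hypothetical illustrations, not text from the standard or any carmaker’s software.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SaeLevel:
    number: int
    name: str
    system_performs_ddt: bool  # does the engaged system perform the whole dynamic driving task?
    fallback: str              # who must take over when the system reaches its limits


# Simplified paraphrase of the SAE J3016 roles (hypothetical structure, not the standard's text).
LEVELS = {
    0: SaeLevel(0, "No Driving Automation", False, "driver"),
    1: SaeLevel(1, "Driver Assistance", False, "driver"),
    2: SaeLevel(2, "Partial Driving Automation", False, "driver"),
    3: SaeLevel(3, "Conditional Driving Automation", True, "driver, when the system requests it"),
    4: SaeLevel(4, "High Driving Automation", True, "system (within its operating domain)"),
    5: SaeLevel(5, "Full Driving Automation", True, "system"),
}


def official_level(marketing_label: float) -> SaeLevel:
    """Map a marketing label such as 2.9 or 2.99999 onto the discrete J3016 level.

    The levels are not a continuous scale: anything below 3 is still Level 2.
    """
    return LEVELS[math.floor(marketing_label)]


def driver_must_supervise(level: SaeLevel) -> bool:
    # Up to Level 2 the human is still driving (with support); from Level 3 the
    # engaged system drives, which is where responsibility starts to shift.
    return not level.system_performs_ddt


for label in (2.0, 2.5, 2.99999, 3.0):
    lvl = official_level(label)
    print(f"L{label}: Level {lvl.number} ({lvl.name}), "
          f"driver must supervise: {driver_must_supervise(lvl)}")
```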

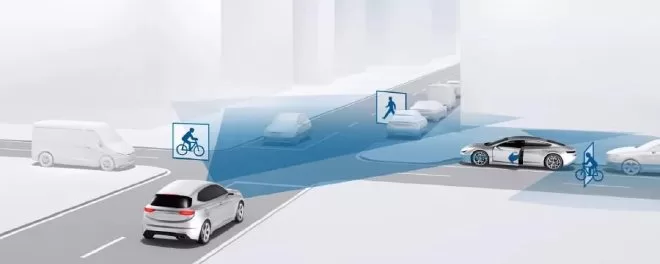

Just as passing a driving test does not turn a new driver into an accident-free veteran, an intelligent driving system that meets the requirements for “autonomous driving” in some scenarios is not thereby free of safety issues. Whether to protect the carmaker’s image or, more fundamentally, for the sake of traffic safety, today’s intelligent driving systems still cannot make the leap from “L2.9” to L3. And because these systems all sit at the same level, the level cannot be used to rank them.

Intelligent driving is still a system that is “heavy on experience, light on specs”. Functions such as highway navigation and automated parking have become largely homogeneous, how good a system is can only be judged by driving with it, and carmakers struggle to find differentiating claims for their marketing. Manufacturers have therefore seized on AEB, a relatively “traditional” but easily comparable function.

An AEB (automatic emergency braking) system uses sensors on the vehicle, such as cameras and millimeter-wave radar, to identify the risk of collision with other road users ahead, and provides active safety by triggering the brakes automatically. Put simply, in an emergency where the driver, for whatever reason, fails to see a pedestrian or vehicle ahead and a crash is imminent, the car brakes on its own to avoid the collision.

AEB is a Level 1 advanced driver assistance function: a relatively mature technology with standardized test protocols. Unlike AI-based autonomous driving, mainstream AEB systems today rely on hand-programmed decision logic and brake only under specific conditions, such as an obstacle detected within a certain speed range. This is why many vehicles earn five-star ratings in standard tests yet still fail to stop in real-world driving or in non-standard test setups.
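Hand-written AEB logic of this kind is often built around time-to-collision (TTC): the gap to the object ahead divided by the closing speed. The Python sketch below shows such a rule. Every number in it (warning at 2.6 s, braking at 1.4 s, a 0.7 confidence floor, a 120 km/h envelope, 8 m/s² deceleration, 0.3 s latency) is a hypothetical placeholder, not any manufacturer’s calibration.

```python
from dataclasses import dataclass

# Hypothetical calibration values, for illustration only.
WARN_TTC_S = 2.6                  # warn the driver below this time-to-collision
BRAKE_TTC_S = 1.4                 # brake automatically below this time-to-collision
MIN_CONFIDENCE = 0.7              # ignore detections the perception stack is unsure about
MAX_ACTIVE_SPEED_MPS = 120 / 3.6  # upper edge of the calibrated speed envelope


@dataclass
class Target:
    distance_m: float         # gap to the object ahead (from camera / radar fusion)
    closing_speed_mps: float  # ego speed minus object speed; > 0 means the gap is shrinking
    confidence: float         # perception confidence in [0, 1]


def time_to_collision(t: Target) -> float:
    """Seconds until impact if neither party changes speed; infinite if the gap is not closing."""
    if t.closing_speed_mps <= 0:
        return float("inf")
    return t.distance_m / t.closing_speed_mps


def aeb_decision(ego_speed_mps: float, t: Target) -> str:
    """Fixed if/else rule returning 'none', 'warn' or 'brake'."""
    if ego_speed_mps > MAX_ACTIVE_SPEED_MPS or t.confidence < MIN_CONFIDENCE:
        return "none"  # outside the calibrated envelope, or the object is not trusted
    ttc = time_to_collision(t)
    if ttc < BRAKE_TTC_S:
        return "brake"
    if ttc < WARN_TTC_S:
        return "warn"
    return "none"


def stopping_distance_m(speed_mps: float, decel_mps2: float = 8.0, latency_s: float = 0.3) -> float:
    """Distance travelled during system latency plus full braking: v * t + v^2 / (2a)."""
    return speed_mps * latency_s + speed_mps ** 2 / (2 * decel_mps2)


v = 100 / 3.6  # 100 km/h towards a stationary car, so closing speed equals ego speed
print(aeb_decision(v, Target(60.0, v, 0.9)))  # warn  (TTC is about 2.16 s)
print(aeb_decision(v, Target(30.0, v, 0.9)))  # brake (TTC is about 1.08 s)
print(aeb_decision(v, Target(30.0, v, 0.5)))  # none  (detection not trusted)
```

With these placeholder numbers, a car at 120 km/h needs roughly 79 m to stop for a stationary obstacle (about 10 m during the assumed latency plus about 69 m of braking), while a 1.4 s TTC threshold fires only about 47 m out. Such a rule could mitigate the crash but not avoid it; to stop in time it would have to fire earlier, on less certain detections, which is where false triggering comes from.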

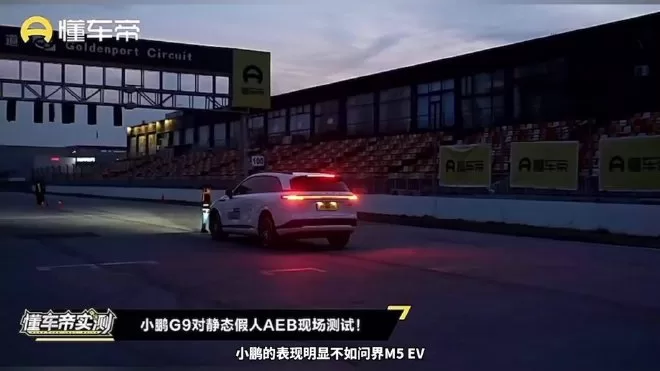

Chasing ever-higher activation speeds for AEB alone, while ignoring the wider traffic environment, does not reflect real driving. A more natural intelligent driving system should perceive obstacles and other information ahead and respond in time by slowing down or changing lanes.

In analyses of the Yu Chengdong and He Xiaopeng debate on AEB, many also raised the issues of “false triggering” and “missed triggering”. Because AEB is only a last line of defense in extreme traffic scenarios, and the human driver remains primarily responsible for driving, avoiding overly sensitive false triggering matters more than avoiding missed triggering. In the vast majority of cases the driver will notice the risk in time and avoid a collision, whereas an overly sensitive AEB that causes “phantom braking” actually increases the risk of being rear-ended.

In short, crossing from L2 to L3 is hard, and intelligent driving experiences are hard to compare. So the relatively objective “hard metric” of how fast an AEB system can still stop the car has, starting with Huawei, been showing up more and more at carmakers’ launch events. It resembles the benchmark-score segment that used to be a staple of smartphone launches: it attracts attention and traffic, but how much real value does it have?

High-level intelligent driving is still far from its “iPhone 4 moment”

XPeng Motors, which has made intelligent driving its brand label, launched the XPeng G6 this year. He Xiaopeng called it “XPeng Motors’ iPhone 4”, hoping it would be as epoch-making for the auto industry as Apple’s iPhone 4 was for smartphones.
As the year draws to a close, the G6 has sold more than 8,000 units in each of the past two months, but it has not become a true blockbuster.

This mirrors the reality of advanced intelligent driving: it is showcased as a key technology at every major launch event, yet in the market and in actual deployment it remains “hard to sell”. Looking back at the hot keywords in China’s intelligent driving industry in 2023, the following stand out, and one of them may mark a “qualitative change”.

Competing to “open up cities” for intelligent driving

With highway navigation and valet parking no longer novel, the intelligent driving competition among Chinese manufacturers has moved to urban roads. In 2023, almost every leading player in China’s smart car race released urban NOA, which provides point-to-point assisted driving on city roads along the navigation route.

In March, XPeng fully rolled out XNGP 4.2.0, opening point-to-point urban NGP for the G9 and P7i Max in Shanghai, Shenzhen, and Guangzhou, and for the P5 series within Shanghai’s high-precision map coverage area. Where high-precision maps are unavailable, it opened an enhanced LCC (lane centering control) that can detour around obstacles, recognize traffic lights, and drive straight through intersections. According to XPeng’s official plan, urban NGP will expand to 50 cities by the end of 2023 and to 200 cities by 2024, aiming to make urban navigation assistance “usable everywhere”.

In April, Huawei officially released ADS 2.0 and announced that urban NCA was available in Shenzhen, Shanghai, Guangzhou, and other areas. Huawei originally planned to cover 45 cities with urban NCA in the fourth quarter of 2023; it has since raised that goal to “available nationwide” by December.
In June, Li Auto pushed its first urban NOA that does not rely on high-precision maps to “early bird” users. In September, it began pushing a beta of commuting NOA to early bird users, initially covering 10 cities including Beijing, Shanghai, Guangzhou, and Shenzhen. In July, NIO opened its NOP+ intelligent assistance on major urban ring roads in cities such as Beijing and Shanghai, and announced plans to roll out urban intelligent driving gradually, route by route. In addition, Great Wall’s Haomo Zhihang and the partnership between SAIC’s IM Motors (Zhiji) and the startup Momenta have also announced plans to “open up cities” for intelligent driving.

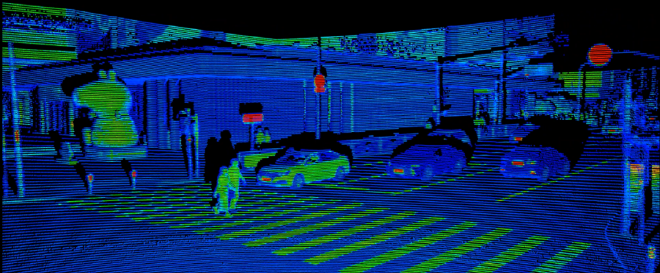

To deliver urban navigation assistance, the industry has generally chosen a “heavy perception, light map” technical path built on LiDAR, BEV (bird’s-eye view) perception, and Transformer models. Since the NIO ET7 first made LiDAR a “label” of advanced intelligent driving, multi-sensor fusion of LiDAR and cameras has become one of the mainstream R&D directions in China. Although LiDAR is expensive, it has proven to deliver more stable perception in complex urban traffic, and models that currently offer urban navigation assistance, such as the XPeng G6 Max and AVATR 11, all carry it.

Stable and accurate as it is, LiDAR produces sparse point clouds that cannot fully meet the needs of iterating intelligent driving. Chinese manufacturers have therefore followed Tesla FSD in adopting BEV perception, Transformer models, and occupancy networks. BEV perception uses the cameras’ view of the surroundings to build a bird’s-eye view, fusing data from different sensors and aligning it in space and time. The Transformer model then processes and learns from this perception information, training the overall intelligent driving capability through deep learning.
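The “alignment in space and time” step can be illustrated geometrically. Production BEV networks lift learned image features into the bird’s-eye view with neural networks, which this Python sketch does not attempt. It only shows the bookkeeping idea: each sensor’s detections are moved into a shared vehicle frame using (hypothetical) mounting offsets, the previous frame is shifted by the car’s own motion, and everything lands on one grid. Grid size, resolution, sensor names, and mounts are assumptions for illustration.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Optional, Set, Tuple

Point = Tuple[float, float]
Cell = Tuple[int, int]
Mount = Tuple[float, float, float]  # (x offset, y offset, yaw) of a sensor on the car

CELL_M = 0.5        # hypothetical grid resolution (metres per cell)
GRID_HALF_M = 50.0  # grid covers +/-50 m around the ego vehicle


def sensor_to_ego(p: Point, mount: Mount) -> Point:
    """Spatial alignment: rotate by the sensor's mounting yaw, then add its offset."""
    mx, my, yaw = mount
    c, s = math.cos(yaw), math.sin(yaw)
    return (mx + c * p[0] - s * p[1], my + s * p[0] + c * p[1])


def previous_to_current(p: Point, dx: float, dyaw: float) -> Point:
    """Temporal alignment: re-express a point from the previous ego frame in the current one,
    assuming the car moved dx metres along its previous heading and then turned dyaw radians."""
    x, y = p[0] - dx, p[1]
    c, s = math.cos(-dyaw), math.sin(-dyaw)
    return (c * x - s * y, s * x + c * y)


def to_cell(p: Point) -> Optional[Cell]:
    x, y = p
    if abs(x) >= GRID_HALF_M or abs(y) >= GRID_HALF_M:
        return None  # outside the grid
    return (int((x + GRID_HALF_M) // CELL_M), int((y + GRID_HALF_M) // CELL_M))


def fuse_bev(current: Dict[str, List[Point]], mounts: Dict[str, Mount],
             previous: Iterable[Point] = (), ego_dx: float = 0.0,
             ego_dyaw: float = 0.0) -> Dict[Cell, Set[str]]:
    """Put every sensor's detections, plus last frame's, onto one shared BEV grid."""
    grid: Dict[Cell, Set[str]] = defaultdict(set)
    for name, points in current.items():
        for p in points:
            cell = to_cell(sensor_to_ego(p, mounts[name]))
            if cell is not None:
                grid[cell].add(name)
    for p in previous:
        cell = to_cell(previous_to_current(p, ego_dx, ego_dyaw))
        if cell is not None:
            grid[cell].add("previous_frame")
    return dict(grid)


mounts = {"front_camera": (1.5, 0.0, 0.0), "lidar": (0.0, 0.0, 0.0)}  # hypothetical mounts
grid = fuse_bev({"front_camera": [(10.0, 0.0)], "lidar": [(11.6, 0.1)]}, mounts,
                previous=[(12.6, 0.0)], ego_dx=1.0)
print(grid)
```

In this example the camera detection, the LiDAR detection, and the motion-compensated detection from the previous frame all fall into the same cell, the kind of agreement a fusion stage can then exploit.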

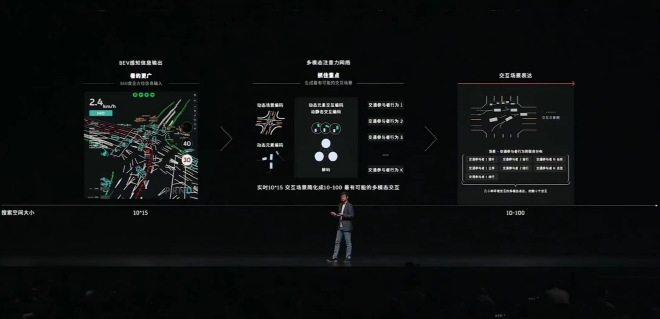

“Light map” means putting the above technology to work: in areas not covered by high-precision maps, the vehicle builds its own map on board from what it perceives and learns, so it can handle complex urban traffic. Judging from what the new energy vehicle makers have disclosed, each has a bewildering array of names for its intelligent driving technology, but none has strayed outside the technical category described above.

XPeng: XBrain, a full-scenario intelligent driving architecture

At its 2023 1024 Tech Day, XPeng proposed XBrain, its ultimate architecture for full-scenario intelligent driving. According to XPeng, XBrain = XNet 2.0 + XPlanner + More. XNet 2.0 is a perception system with spatiotemporal understanding that integrates dynamic BEV, static BEV, and an occupancy network. It lets the XNGP intelligent driving system learn a wide range of large and irregular intersections, increases the perception range by 200%, and adds 11 new perception categories, such as street sweepers and riderless motorcycles and bicycles. XPlanner is a neural-network-based planning and control system characterized by long time horizons, multiple objects, and strong reasoning. It iterates continuously on models and data, adapts to changes in the surroundings, and generates the optimal motion trajectory, giving the system a “human-like” ability to negotiate with other road users.

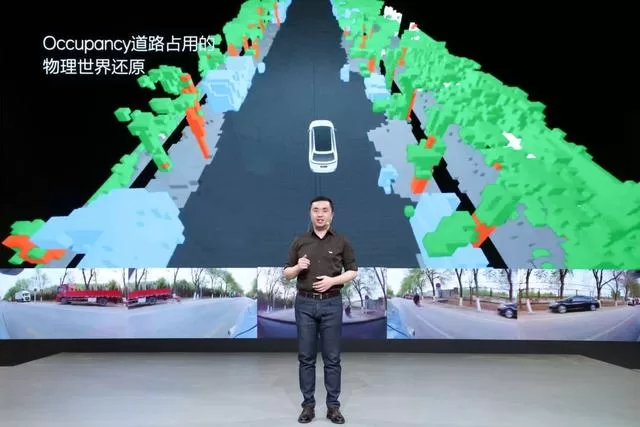

Li Auto’s “no-map” urban NOA trio: BEV + Transformer + Occupancy

At its Family Tech Day in May, Li Auto described the same technical concepts in its urban NOA algorithm: a static BEV network, a dynamic BEV network, and an occupancy network, with NeRF technology used to improve the accuracy and detail of the occupancy network’s reconstruction so that the physical world is restored completely.

Static BEV perceives and constructs the road structure in real time, in effect mapping while driving. This solves the freshness problem of high-precision map data, and the perceived features better fit the needs of autonomous driving. Dynamic BEV overcomes the occlusion and cross-camera problems that traditional vision cannot solve, accurately identifying the position and speed of vehicles as they pass from one camera’s view to another. Even when the view is blocked, it can “fill in” the surroundings mentally for stable perception, much as a human would.

Li Auto handles general obstacles with the occupancy network, which builds a virtual world that corresponds completely to the real one and identifies objects that are neither part of the road nor recognized traffic participants, such as trash cans and temporary construction signs. The outputs of the three networks are fed together into a prediction model, which outputs in real time the predicted trajectories of all surrounding traffic participants over the next few seconds. These predictions are adjusted dynamically to give more accurate input to subsequent decision-making and planning.
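The division of labor described above, class-agnostic occupancy for general obstacles plus short-horizon trajectory prediction, can be sketched in a few lines of Python. This is not Li Auto’s method: the occupancy here is a plain set of grid cells filled from obstacle points rather than a neural network’s output, and the predictor is a constant-velocity baseline standing in for a learned prediction model. The resolution, horizon, time step, and 2 m gap are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple

Point = Tuple[float, float]
Cell = Tuple[int, int]
CELL_M = 0.5  # hypothetical grid resolution in metres


def cell_of(x: float, y: float) -> Cell:
    # Floor division keeps negative coordinates in the correct cell.
    return (int(x // CELL_M), int(y // CELL_M))


def build_occupancy(obstacle_points: List[Point]) -> Set[Cell]:
    """Mark every cell that contains an obstacle point without asking what the object is,
    so a trash can and a construction sign are treated the same way."""
    return {cell_of(x, y) for x, y in obstacle_points}


def path_is_clear(path: List[Point], occupied: Set[Cell]) -> bool:
    return all(cell_of(x, y) not in occupied for x, y in path)


@dataclass
class Agent:
    x: float
    y: float
    vx: float  # m/s
    vy: float  # m/s


def predict(agent: Agent, horizon_s: float = 3.0, dt: float = 0.5) -> List[Point]:
    """Constant-velocity rollout over the next few seconds (a baseline, not a learned model)."""
    steps = int(round(horizon_s / dt))
    return [(agent.x + agent.vx * dt * k, agent.y + agent.vy * dt * k)
            for k in range(1, steps + 1)]


def first_conflict(ego_path: List[Point], other_path: List[Point],
                   min_gap_m: float = 2.0) -> Optional[int]:
    """Index of the first shared time step at which the two paths come closer than min_gap_m."""
    for k, ((ex, ey), (ox, oy)) in enumerate(zip(ego_path, other_path)):
        if math.hypot(ex - ox, ey - oy) < min_gap_m:
            return k
    return None


occupied = build_occupancy([(10.2, 0.1), (10.4, 0.3)])  # e.g. a cone in the ego lane
straight = [(float(x), 0.0) for x in range(15)]
shifted = [(float(x), 2.0) for x in range(15)]
print(path_is_clear(straight, occupied), path_is_clear(shifted, occupied))  # False True

ego = predict(Agent(0.0, 0.0, 10.0, 0.0))
pedestrian = predict(Agent(15.0, -3.0, 0.0, 1.0))
print(first_conflict(ego, pedestrian))  # 2, i.e. about 1.5 s ahead
```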

NIO: open routes, share routes, and link routes into a network

While competitors loudly promote their goals for “opening up cities”, NIO began collecting users’ “navigation route wish lists” in the NIO app at the end of September. Based on operational plans and road conditions, NIO will then gradually open urban NOA on the routes users have asked for. According to what NIO disclosed at this year’s Innovation Technology Day, the logic behind this gradually “generalizing” system resembles that of the other two new EV makers. First, the NIO NAD Lane 2.0 network, built on BEV perception and a Transformer model, handles more complex static urban road topology. Second, a data-driven, cloud-based large model using a spatiotemporal interaction Transformer multimodal attention network continuously improves the vehicle’s perception. Third, at the decision-making level, a spatiotemporal interaction Transformer multimodal attention network screens scenarios to find better paths and driving decisions in complex urban traffic, achieving “human-like driving”.
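The “attention” in these network names refers to the mechanism at the core of Transformer models: each element (for example, a road element or a traffic participant at a given time step) is scored against a query, the scores are normalized with softmax, and the output is the correspondingly weighted mix of values. The Python sketch below shows single-head scaled dot-product attention with hand-picked vectors. Real models learn the query, key, and value projections, use many heads, and run on tensors; nothing here reflects the actual design of NIO’s networks, and the token vectors are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Tuple[Vector, List[float]]:
    """Single-query scaled dot-product attention: softmax(q . k / sqrt(d)) applied to the values."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    out = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return out, weights


ego_query = [1.0, 0.0]   # hypothetical query from the ego vehicle
tokens = [[2.0, 0.0],    # hypothetical agent heading into the ego path
          [0.0, 2.0],    # hypothetical agent off to the side
          [-2.0, 0.0]]   # hypothetical agent moving away
values = [[1.0], [2.0], [3.0]]

out, weights = attention(ego_query, tokens, values)
print([round(w, 3) for w in weights], out)
```

The 1/√d scaling keeps dot products from growing with the vector dimension and saturating the softmax. A “spatiotemporal” variant, in this simplified picture, lets the keys come from several time steps as well as several objects.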

Chinese carmakers and R&D institutions are thus converging on the same development path for urban intelligent driving. On perception, they adopt fusion of LiDAR and camera vision: BEV visual perception is primary, while LiDAR covers blind spots and improves the overall safety and stability of the system. On algorithms, they are gradually moving from traditional L2 systems driven by hand-written rules to AI decision-making based on Transformer models, letting the system “learn to drive” through neural networks and machine learning instead of following “fixed rules”. This lets the system adapt to more complex urban traffic and gradually loosens its dependence on high-precision maps, moving from “light map” to “no map”.

Behind this path lies a competition in data volume and data processing capability: how much data is accumulated and how well the system learns from it directly determine its capability. Major manufacturers are therefore all building their own closed data loops to prepare for further upgrades. This is also why the announced “city opening” plans roll out gradually, and why users in many cities already declared “open” complain that the feature is hard to use in many places: even where the function is nominally “supported”, urban performance still falls far short of user expectations.

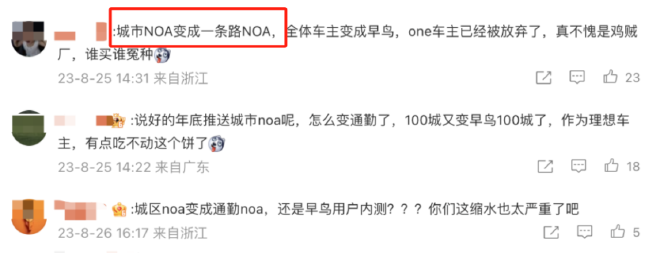

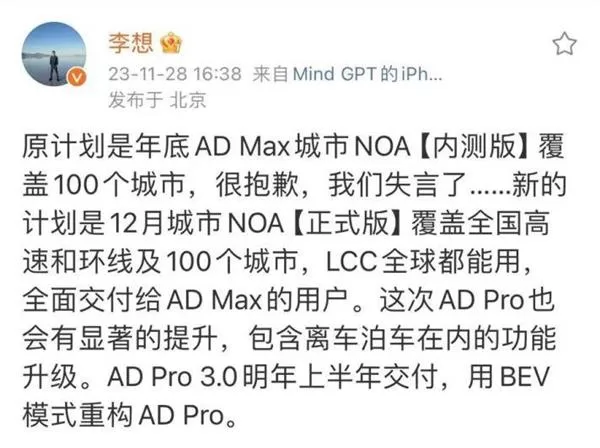

For users, which specific scenarios will get genuinely useful smart features matters more than the number of “open cities” a manufacturer announces.

Exploring more specific scenarios

The technical path is fairly clear, but advanced intelligent driving still has a long way to go from “usable” to “good to use”, and overly ambitious plans eventually have to face realistic development timelines. At the Shanghai Auto Show in April, for example, Li Auto announced that NOA would reach 100 cities this year. By the Chengdu Auto Show in August, it had changed its message from “urban NOA” to “commute NOA”, which provides NOA only on a limited route and is far less demanding than full urban NOA. Even this easier commute NOA was not opened to all owners, only to specially recruited “early bird users”. Most recently, Li Xiang publicly apologized on Weibo: covering 100 cities with urban NOA by the end of the year would be difficult, and the new plan is to cover national highways, ring roads, and 100 cities by December. His post did not point out that many of those highway and ring road sections may still rely on high-precision maps.

For users, then, the full rollout of “large and comprehensive” urban navigation assistance everywhere may still take time, and “few but refined” advanced functions for specific scenarios may be more worth looking forward to. For example, the parking in mechanical (stacked) garages that Huawei demonstrated at the Luxeed S7 launch should help users in cities such as Shanghai, where such “difficult scenarios” are common. Likewise, NIO’s navigation into highway service areas for battery swapping is expected to ease the pain of finding swap stations in service areas and improve the overall travel experience of NIO owners.

High-level intelligent driving is bound to be a track that demands heavy investment over a long cycle. Take Huawei: in July last year, speaking at the China Auto Blue Book Forum, Yu Chengdong said the car BU was investing more than a billion dollars a year, with 7,000 people working on it directly and more than 10,000 indirectly, 70% of them R&D staff working on intelligent assisted driving. With investment this heavy, how can a healthy business model emerge? When will intelligent driving have its iPhone 4 moment? Only time will tell.