The debate over pure vision versus LiDAR in assisted driving is both old and new. In 2022, Tesla firmly backed the vision approach, while most domestic automakers opposed it. By 2024, some brands that had launched multiple LiDAR-equipped models began shifting toward pure-vision products. Since March this year, the tide seems to have turned again, with the LiDAR camp gaining the advantage. This back-and-forth tracks how far the underlying technology has matured, so we can expect the discussion to continue until the remaining technical barriers disappear.

Four Stages of Machine Learning

First, it should be made clear that “pure vision versus LiDAR” is not a direct competition. No vehicle relies solely on LiDAR without cameras; LiDAR plays a supporting role in positioning. The proper comparison is therefore pure vision versus vision plus LiDAR. At first glance, the latter combination simply adds a “helper,” like the third eye of Erlang Shen, the three-eyed deity of Chinese mythology, which ought to enhance perception. The issues, however, are far more complex than they appear.

Initially, Waymo aimed to develop Level 4 and Level 5 autonomous vehicles. Its test cars carried expensive mechanical LiDAR units costing up to $100,000, more than most cars on the road. The issue was not just camera capability: the algorithms still operated at the “expert system” level, with fixed knowledge and rules for the machine to execute.

Later came “feature engineering,” in which engineers extracted features for the machine to learn. This resembled human driving: humans are sensitive to changes in color, shape, size, and position, and these changes strongly drive attention. This attention mechanism later inspired AI architectures. People simplify the changing scene outside the car into “drivable” and “non-drivable” areas, then apply common sense and traffic rules to decide how to drive.

In the third stage, “machine learning,” raw data and a small number of labels were fed to the machine, which learned the features on its own. During this stage AI made remarkable progress, surpassing humans in image recognition and classification. It was at this point that Tesla introduced an algorithm called the “Occupancy Network.” It virtually divides the 3D space along the motion path into numerous small cubes, determines whether each cube is occupied, and for occupied cubes distinguishes moving from non-moving objects. This removes the earlier limitation of having to identify an object before responding to it. Tesla had previously suffered failures involving unstructured obstacles, such as overturned trucks and cows suddenly appearing on the road.

This technology is built on Tesla’s pure-vision approach. Musk argues that if humans can drive with two eyes, pure vision should work fine. This argument quietly swaps one concept for another. Machines have not yet reached the fourth stage, where they can perceive and understand the world like humans and can learn and adapt in nearly all environments, which is what “general artificial intelligence” requires. Pure vision therefore currently falls short of human capability.

Pure Vision Falls Short of Human Eyes, and the Issue Lies in the Brain

Under these conditions, debating the speed limit of AEB (automatic emergency braking) offers little value; such figures often serve as marketing jargon. Pure vision’s inferiority to human ability is no longer just an “eye” issue.

A human brain is born with a model framework but very little data. For instance, a three-month-old baby already has visual capabilities. When shown a video of a snake, the baby displays clear signs of distress: pupil constriction, stiff body language, and crying. This reaction stems from a few parameters retained at birth. Most parameters are learned later, during a period in which humans prune many inactive neural connections. The cost is the loss of long-term memories from that period.

Compared with humans, intelligent machines struggle to predict all the potential consequences of their actions. Because they lack human experience, their behavior often shows “unexplainable” phenomena. No formal method can build models for all objects and behaviors, such as how to interact and cooperate with other agents and anticipate outcomes. Machine intelligence still has significant flaws that training alone cannot resolve.
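The cube-based representation described earlier in this section can be illustrated with a minimal sketch. It is not Tesla's implementation: the real system infers occupancy from camera images with a neural network, whereas this sketch assumes the 3D points and their speeds are already given. The function names, the 0.5 m/s threshold, and the grid dimensions are all hypothetical.

```python
import math

def voxel_index(point, origin, voxel_size):
    """Map a 3D point (meters) to the integer index of the cube containing it."""
    return tuple(math.floor((p - o) / voxel_size) for p, o in zip(point, origin))

def build_occupancy(points, speeds, origin, voxel_size, shape, moving_threshold=0.5):
    """Return {voxel index: "moving" | "static"} for every occupied cube.

    points: iterable of (x, y, z) in meters (assumed already estimated).
    speeds: per-point speed in m/s (assumed already estimated).
    shape:  number of cubes along x, y, z; points outside are ignored.
    """
    occupancy = {}
    for point, speed in zip(points, speeds):
        idx = voxel_index(point, origin, voxel_size)
        if not all(0 <= i < n for i, n in zip(idx, shape)):
            continue  # outside the region of interest
        # A cube is "moving" if any point inside it exceeds the threshold.
        if speed > moving_threshold or occupancy.get(idx) == "moving":
            occupancy[idx] = "moving"
        else:
            occupancy[idx] = "static"
    return occupancy

def path_blocked(occupancy, path_voxels):
    """True if any cube on the planned path is occupied, whatever the object is."""
    return any(v in occupancy for v in path_voxels)

# An unclassified obstacle about 5 m ahead, plus one point far outside the grid.
points = [(5.2, 0.1, 0.3), (5.4, -0.2, 0.6), (50.0, 0.0, 0.0)]
speeds = [0.0, 0.0, 3.0]
grid = build_occupancy(points, speeds, origin=(0.0, -2.0, 0.0),
                       voxel_size=0.5, shape=(40, 8, 4))
lane = [(i, 4, 0) for i in range(20)]  # cubes straight ahead at ground level
blocked = path_blocked(grid, lane)
```

The point of the design is that `path_blocked` never asks what the obstacle is; an overturned truck and a cow produce the same answer as long as they occupy cubes on the path.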

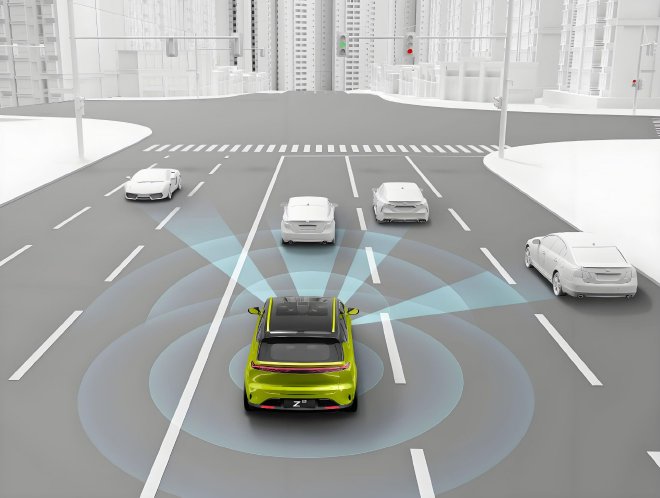

The intermediate results of end-to-end models often cannot be explained. We impose strict rules to manage these uncontrollable possibilities. For example, we instruct machines never to run a red light, yet ambulances and fire trucks may run red lights when it is safe. To avoid complicating the rules, we limit the application scenarios instead. Cameras have become better at handling bright light, rapid changes in illumination, low light, and obstructed views, but the big issue still lies in the mind. Do not expect pure vision to replace humans at this stage.

Is LiDAR a Good Assistant?

At this point, external tools become useful again. Weak prediction capability is not the issue here. The real world is three-dimensional, while pure vision works from a 2D projection of that 3D world. LiDAR directly measures the missing dimension, depth. Vision passively receives light signals, which cannot be controlled; the human eye faces the same issue. The same car may look completely different at night and during the day. LiDAR actively emits its own light and is largely unaffected by ambient visible light. Vision perceives color and brightness, while LiDAR detects contours. For the same car, shape is usually more stable than color and brightness, which vary with the light. In theory, then, LiDAR data is more reliable.

Although LiDAR costs have come down, its remaining flaws stem from its active operation. The laser beam diverges, so at greater distances the spot grows and energy density drops quickly.
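The effect of beam divergence on energy density can be made concrete with a rough geometric sketch. It treats the beam as a simple cone, where spot diameter is approximately the exit aperture plus 2 × range × tan(divergence / 2), and it ignores atmospheric loss and target reflectivity. The 0.1° divergence and 1 cm aperture are hypothetical values chosen for illustration, not the specification of any real sensor.

```python
import math

def spot_diameter(range_m, divergence_deg, aperture_m=0.01):
    """Approximate laser spot diameter (m) at a given range for a conical beam."""
    half_angle = math.radians(divergence_deg) / 2.0
    return aperture_m + 2.0 * range_m * math.tan(half_angle)

def relative_energy_density(range_m, divergence_deg, aperture_m=0.01):
    """Energy per unit area at range, relative to the density at the aperture.

    The same pulse energy is spread over a circle whose area grows with the
    square of the spot diameter.
    """
    d = spot_diameter(range_m, divergence_deg, aperture_m)
    return (aperture_m / d) ** 2

for r in (10, 50, 100, 200):
    d = spot_diameter(r, 0.1)
    rho = relative_energy_density(r, 0.1)
    print(f"{r:>4} m: spot {d * 100:6.1f} cm, relative density {rho:.5f}")
```

Under these assumptions the spot is roughly 36 cm wide at 200 m, so a small or thin object intercepts only part of the beam, and the light must still make the return trip to the receiver.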

With current technology and good lighting, a 192-line LiDAR provides less information about objects beyond 200 meters than an 8-megapixel camera running pure-vision algorithms. Fusing vision and LiDAR also requires significant computing power to process point-cloud and image data together. As a result, its recognition ability falls short of pure vision’s.

A leading technology executive told us that, contrary to popular belief, LiDAR is very sensitive to weather. Light snow does not significantly obstruct visibility, but the small translucent particles create noise just a few meters from the LiDAR, and the system has trouble recognizing them as snowflakes that should be ignored. Millimeter-wave radar is the sensor that can truly shrug off extreme weather, because its longer wavelength diffracts more easily. The same property, however, gives millimeter-wave radar disappointing accuracy, and it struggles with precise distance measurement.

In practical applications, LiDAR scans many objects and generates numerous echoes; the signals overlap, which complicates recognition. LiDAR’s frame rate is also lower than a camera’s, so for fast-moving objects at long distances its errors are larger than a camera’s. The underlying issue is computing power: LiDAR data is dense but contains much useless information, so it demands more processing.

Because of these shortcomings, LiDAR cannot stand alone. It serves only as a supplementary tool for covering blind spots. The question then becomes whether LiDAR is worth having solely for special conditions.

Special conditions include low light, simple road conditions, and high-speed driving. In these situations cameras cannot see far, but the system needs a long “takeover window.” LiDAR is a good blind-spot solution here. In such scenarios, drivers relying on vision-only assistance have two options: use assisted driving and slow down, leaving 5 to 10 seconds for a possible takeover, or drive manually and skip assisted driving altogether. LiDAR can resolve this dilemma, but it costs an additional 10,000 to 20,000. Drivers must choose wisely.

Rational drivers will avoid putting themselves in dangerous situations. LiDAR can indeed offer greater freedom in certain scenarios. In extreme weather, however, when most people hesitate to travel at all, it is best to pull over and wait rather than rely on assisted driving.

Weighing two solutions with different adaptability and costs leads to a complicated set of constraints. As technology evolves, the conclusions may change. For instance, if computing power becomes cheap, LiDAR’s cost may matter less. Better filtering algorithms might resolve the various fusion issues and widen the range of extreme scenarios the system can handle. In the future, other sensors may emerge that provide broader visual and 3D measurement capabilities at lower cost, but we do not see them yet.
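The “takeover window” trade-off discussed above comes down to simple arithmetic: the distance a car covers during the window must fit inside the range at which the sensors can reliably detect a hazard. The sketch below works this out for the 5 to 10 second window mentioned in the text. The 100 m and 200 m sensing ranges are hypothetical placeholders, not measured figures for any camera or LiDAR.

```python
def takeover_distance_m(speed_kmh, window_s):
    """Distance (m) traveled at constant speed during the takeover window."""
    return speed_kmh / 3.6 * window_s

def max_speed_kmh(sensing_range_m, window_s):
    """Highest constant speed (km/h) at which the window fits in the sensing range."""
    return sensing_range_m / window_s * 3.6

# Distance consumed by the window at highway speed.
for window in (5, 10):
    print(f"120 km/h, {window:>2} s window: {takeover_distance_m(120, window):.0f} m")

# Speed a driver would have to slow to, for two assumed reliable sensing ranges.
for sensing_range in (100, 200):  # hypothetical values
    print(f"{sensing_range} m range, 10 s window: "
          f"{max_speed_kmh(sensing_range, 10):.0f} km/h")
```

This is why the vision-only option in low light amounts to slowing down: a shorter reliable range directly caps the speed at which the same window can be guaranteed.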